Robust active learning for next test recommendations

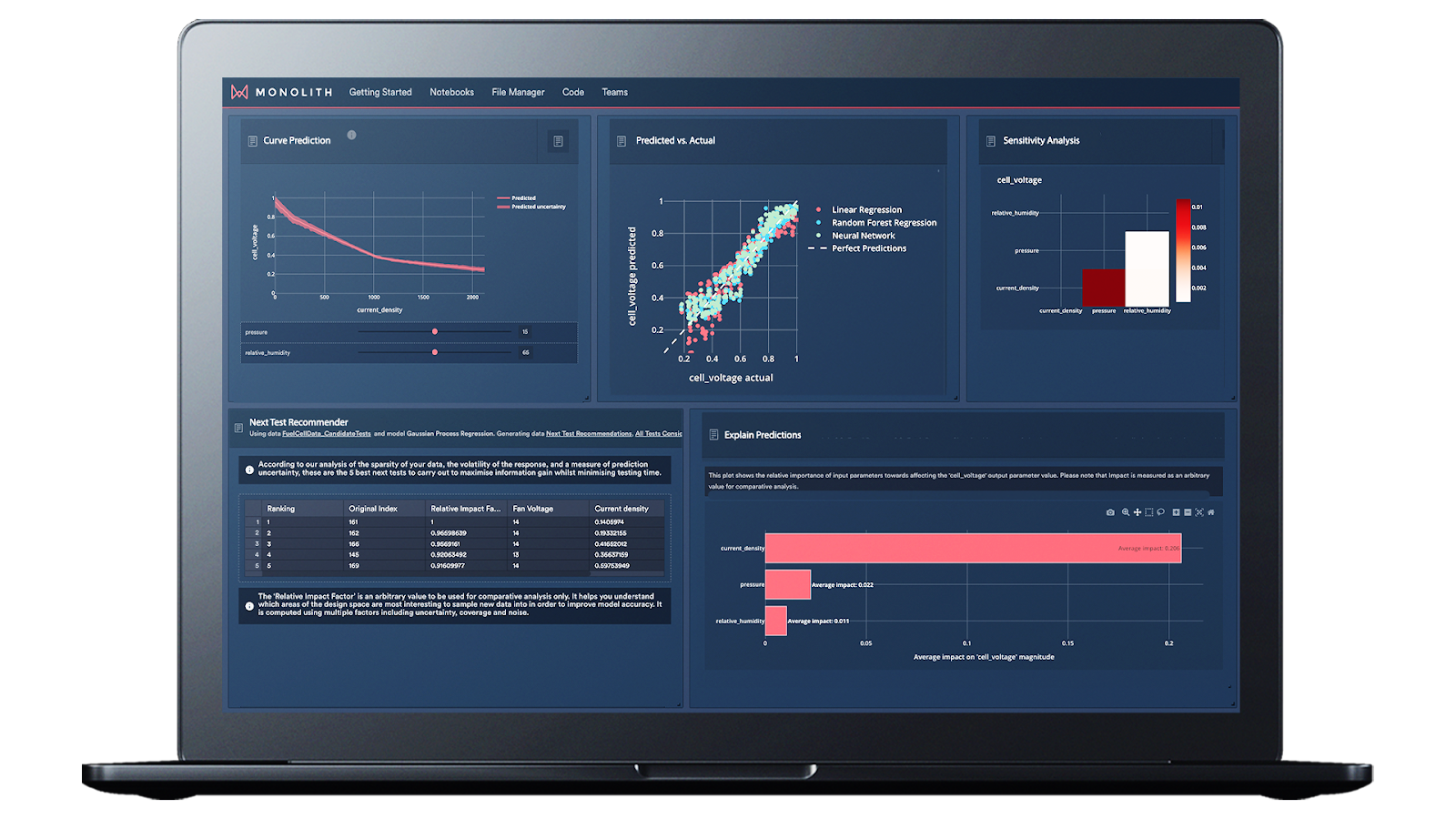

Preview of the Next Test Recommender by Monolith: it provides users with actionable recommendations for the best test conditions to choose for their next batch of tests, ranking the most impactful new tests to carry out based on an analysis of previously collected data.

Active learning: introduction

Physical and simulation testing in engineering are often time-consuming and costly; however, they are essential to understanding complex physics problems.

Engineers face a dilemma: either conduct excessive tests to cover all possibilities, or run too few tests and risk missing critical performance parameters. Active learning optimises this trade-off by selecting the most valuable experiments to run first.

In addition to this trade-off, incumbent global leaders in safety-critical industries like automotive and aerospace face fierce competition from new players, stringent regulations, and disruptive technologies. This puts them at risk of losing market share, incurring high costs, and damaging their brand reputation due to recalls and quality issues. To overcome these challenges, leaders need to expedite processes without compromising risk management.

By leveraging AI and machine learning, they can extract valuable insights, optimise designs, and identify crucial performance parameters accurately.

Integrating Monolith in your verification and validation process can enhance operational efficiency and streamline testing procedures, reducing reliance on excessive physical tests.

What is active learning?

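Active learning is a machine learning approach in which the algorithm chooses which data points to collect next, rather than learning from a fixed, pre-selected dataset. It draws on machine learning and other data science techniques, and is gaining prominence in automotive and aerospace product development, where its main application is guiding engineers through testing plans more efficiently.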

Instead of exhaustive testing, active learning acts as a "next test recommender", identifying tests that provide maximum information gain and avoiding redundant ones. While this approach can yield substantial time and cost savings, ensuring its robustness and effectiveness is crucial, especially in safety-critical industries.
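As a rough illustration of the "next test recommender" idea, here is a minimal sketch, not Monolith's implementation. It assumes a model-free, space-filling score in place of a true information-gain measure: each pick is the untested condition farthest from every test already run and every test already chosen for the batch, which is one simple way of avoiding redundant tests. The names `recommend_batch` and `min_distance` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# A test condition, e.g. (speed, temperature); the 2-D layout is only an example.
Condition = Tuple[float, ...]


def min_distance(c: Condition, others: Sequence[Condition]) -> float:
    """Euclidean distance from c to its closest neighbour in `others` (inf if empty)."""
    return min((math.dist(c, o) for o in others), default=math.inf)


def recommend_batch(candidates: Sequence[Condition],
                    tested: Sequence[Condition],
                    batch_size: int) -> List[Condition]:
    """Greedily pick `batch_size` untested candidates, most informative first.

    "Informative" is approximated here by distance from existing and already
    chosen tests (a space-filling heuristic), so the batch avoids near-duplicates.
    """
    chosen: List[Condition] = []
    pool = [c for c in candidates if c not in tested]
    for _ in range(min(batch_size, len(pool))):
        # Re-score after every pick so later picks account for earlier ones.
        best = max(pool, key=lambda c: min_distance(c, list(tested) + chosen))
        chosen.append(best)
        pool.remove(best)
    return chosen


# Usage: a 3 x 3 grid of candidate conditions with one test already run at the origin.
grid = [(float(x), float(y)) for x in range(3) for y in range(3)]
print(recommend_batch(grid, tested=[(0.0, 0.0)], batch_size=3))
# [(2.0, 2.0), (0.0, 2.0), (2.0, 0.0)]
```

Re-scoring after every pick is what keeps the batch spread out; taking the top scores from a single pass could return several near-identical conditions clustered in one corner.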

Understanding the risks of active learning

In industries where safety is critical, such as automotive and aerospace, active learning algorithms must navigate diverse experimental designs and address the inherent variability in their performance.

A method that performs well on one dataset may not yield the same results on another. This variability poses risks when it comes to safety-critical aspects of product development.

As with all machine learning, it is therefore critical to apply and evaluate algorithms scientifically. For active learning specifically, the evaluation is often made harder by the lack of available ground truth data.

Monolith approach to robust active learning

To mitigate risk, the Next Test Recommender employs two approaches: aggregating a diverse combination of active learning methods, and a human-in-the-loop review of the experiment selection and the learning process itself.

The Next Test Recommender combines active learning methods, including multiple model-free, uncertainty, and disagreement sampling techniques. Broadly, model-free methods choose tests from the layout of the test space alone, uncertainty sampling targets conditions where a model is least confident, and disagreement sampling targets conditions where several models disagree the most. Combining multiple algorithms in this way increases the diversity of opinions and exploits the “wisdom of crowds”, much like ensemble models, which combine the predictions of many models.
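The text does not say how the Next Test Recommender merges the methods' opinions. One simple, well-known option, sketched here purely as an assumption, is Borda-style rank aggregation: each method ranks the candidate tests, and candidates are ordered by their mean rank. Ranks are used instead of raw scores because distance, uncertainty, and disagreement scores live on different scales. The function names and the three score lists are hypothetical, made-up values.

```python
from typing import Dict, List, Sequence


def to_ranks(scores: Sequence[float]) -> List[float]:
    """Rank candidates by score (highest score -> rank 0); ties share their average rank."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied scores.
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2
        i = j + 1
    return ranks


def combine_by_mean_rank(method_scores: Dict[str, Sequence[float]]) -> List[int]:
    """Borda-style aggregation: order candidate indices by mean rank across methods."""
    all_ranks = [to_ranks(s) for s in method_scores.values()]
    n = len(all_ranks[0])
    mean_rank = [sum(r[i] for r in all_ranks) / len(all_ranks) for i in range(n)]
    return sorted(range(n), key=lambda i: mean_rank[i])


# Made-up scores for four candidate tests (higher = more worth running).
scores = {
    "model_free": [0.9, 0.1, 0.5, 0.4],
    "uncertainty": [0.2, 0.8, 0.7, 0.1],
    "disagreement": [0.3, 0.6, 0.9, 0.2],
}
print(combine_by_mean_rank(scores))  # [2, 0, 1, 3]
```

Candidate 2 is ranked first or second by every method, so it comes out on top overall, while candidates 0 and 1, each the favourite of a single method, are ranked poorly by the others.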

This reduces the risk that any single method is biased towards exploiting one aspect of the data or model, or simply performs poorly on a given dataset.

Allowing human-in-the-loop inspection of the selected experiments gives domain experts oversight of the system, combining their expertise and domain knowledge with the power of machine learning.

Combining methods yields a more robust sampling strategy

While developing the Next Test Recommender, we ran many experiments with different active learning techniques on existing datasets and live simulations. The active learning approaches were compared against each other and against a random sampling baseline.

A small selection of results is shown in the figure below, from which the following conclusions can be drawn:

- Random sampling helps ensure that the entire space is explored. It reduces the risk of missing important areas of the test space, but the trade-off is unnecessary sampling of less important areas.

- The uncertainty method shown here uses a machine learning model and an uncertainty metric to select the next tests to run, and provides an (unusually dramatic) example of the risks of doing active learning badly. It is biased towards selecting experiments near the edges of the explored space, and as a result misses other important areas, such as the central peak of the surface. The end result is that more tests were required to discover the surface than with random sampling!

- The final approach combines multiple active learning methods and best balances exploration and exploitation of the test space. It discovers the critical areas of the surface in fewer steps, with less data collected, than random sampling.
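The edge bias described for the uncertainty method can be reproduced in miniature. The sketch below is an assumed toy setup, not the experiment behind the figure: a committee of straight lines fitted to bootstrap resamples of a few made-up measurements (a basic form of disagreement sampling), scoring each candidate by the variance of the committee's predictions. Because each member's prediction is linear in x, that variance is a convex quadratic in x, so with this model the most "uncertain" candidate is always one of the extremes of whatever candidates remain. All names and data here are hypothetical.

```python
import random
import statistics
from typing import List, Sequence, Tuple

Line = Tuple[float, float]  # (intercept, slope)


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Line:
    """Ordinary least-squares fit of y = a + b * x."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def bootstrap_committee(xs, ys, n_members: int, seed: int = 0) -> List[Line]:
    """Fit one line per bootstrap resample of the data: a simple model committee."""
    rng = random.Random(seed)
    members: List[Line] = []
    while len(members) < n_members:
        idx = [rng.randrange(len(xs)) for _ in xs]
        if len({xs[i] for i in idx}) < 2:
            continue  # a line needs at least two distinct x values
        members.append(fit_line([xs[i] for i in idx], [ys[i] for i in idx]))
    return members


def disagreement(members: Sequence[Line], x: float) -> float:
    """Uncertainty score: variance of the committee's predictions at x."""
    return statistics.pvariance([a + b * x for a, b in members])


# Made-up measurements, all taken in the middle of a 0..10 test range.
xs = [4.0, 4.5, 5.0, 5.5, 6.0]
ys = [2.1, 1.7, 2.6, 2.2, 2.9]
committee = bootstrap_committee(xs, ys, n_members=20)
candidates = [i * 0.5 for i in range(21)]
pick = max(candidates, key=lambda x: disagreement(committee, x))
print(pick)
```

The pick lands on 0.0 or 10.0, an end of the range. A richer model would usually behave less starkly, but uncertainty that grows in poorly constrained, extrapolated regions is a common way for a single uncertainty method to drift towards the boundaries.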

Unlike random sampling (2, in the middle) and a single active learning (AL) method (3, on the right), the robust combination of AL methods (1, on the left) ensures safe coverage of the space and a focus on critical regions.

Combining learning outcomes & safety

A robust active learning strategy is critical because it is often difficult to know ahead of time which sampling strategy will be the most efficient. A particular uncertainty method may be the most efficient for one test space yet perform poorly on another.

A robust strategy should help users improve their active learning activities and outcomes safely, while being less sensitive to the particular dataset considered or to the weaknesses of any single active learning method.

Conclusion: active learning strategies

Active learning enhances engineering processes by optimising data selection and test recommendations, contributing to safety and efficiency in product development.

The integration of robust active learning algorithms in our Next Test Recommender represents a significant advancement in product development for safety-critical industries. By combining multiple active learning methods, we address the inherent variability in active learning performance across different datasets.

This approach, together with the human-in-the-loop design, empowers engineers to make informed decisions, optimise their test plans, and retain responsibility for continuously improving the safety, quality, and reliability of the final product.