Maximising Data Potential With Transfer Learning: A Comprehensive Guide

What if there was a way to maximise the potential of your data?

This is possible by utilising transfer learning techniques for machine learning (ML) applications and artificial intelligence (AI).

The popularity of AI is due in part to the ability to transfer knowledge acquired through learnings from similar problems.

You might not be able to predict the performance of a rocket based on the performance of your bike, but the physics of an old plane could help you explore the design of a new plane.

Learn how to prepare your data for transfer learning.

Initial Transfer Learning Considerations for Machine Learning Models

To do this successfully, one needs to pause and think carefully about how to set up both a ML representation learning algorithm as well as the data to be used in the transfer learning process.

This requires careful consideration of how to set up machine learning algorithms and data, but can allow for the use of past knowledge in exploring new designs and learning strategies.

In this article, we will explore what transfer learning is, the benefits of transfer learning for traditional machine learning models and applications, and case studies which show how engineering organisations are able to maximise their data through transfer learning, learning strategies, and pre-trained models.

What Is Transfer Learning?

Transfer learning is a machine learning technique where pre-trained models (which have already learned a range of patterns and very task-specific features from a large data set) are used as a starting point for training new models on a new task.

With transfer learning, a solid machine learning model can be built with comparatively little training data because the entire model is already pre-trained.

This is especially valuable in natural language processing, as typically, expert knowledge is required to create large labeled data sets needed for a trained model.

Additionally, model training time is reduced because it can sometimes take days or even weeks for transfer learning for deep neural networks from scratch on a complex task.

Transfer learning saves time and improves performance by leveraging the historic training data and knowledge gained from the pre-trained model.

What Are the Three Categories of Transfer Learning for Deep Learning Models?

There are three categories of transfer learning:

1) Inductive transfer learning

Where knowledge from one domain is transferred to another domain.

2) Unsupervised transfer learning

Where a pre-trained model is used to learn features from unlabeled data to improve performance on a new and/or related task (also known as multi-task learning for complex tasks).

3) Domain adaptation

Where a model trained on a source domain is adapted to perform well on a target domain by minimizing the distributional difference between the two domains.

Transfer Learning Benefits for Machine Learning Applications

Machine learning algorithms are only as good as the data on which they are trained.

By utilising transfer learning for machine learning, the accuracy and efficiency of these pre-trained models significantly increase.

For engineering organisations, this means better quality products faster with optimal pre-trained models.

One of the reasons why AI has grown in popularity so quickly is the fact that the knowledge acquired through learning can be "transferred" to a different problem.

Automotive engineers could use the knowledge acquired on an existing car to explore the design of a new car.

Similarly, the physics of an old plane could help aerospace engineers explore the design of a new plane.

The Importance Of Properly Preparing Your Data For Transfer Learning

In order to use transfer learning successfully, it is important to properly think and plan carefully about how to set up both the machine learning algorithms and the data (With a keen focus on how to set up pre-trained models).

To prepare your data for transfer learning, there are a few key steps you should follow.

Firstly, you need to identify a pre-trained model that is relevant for transfer learning to your new problem.

You can then use this pre-trained model as the starting point for your transfer learning.

Next, you need to prepare your data by cleaning and preprocessing it in preparation for creating deep learning models.

This may involve removing outliers, scaling the data, and converting it into the format required by the pre-trained model.

Finally, you need to fine-tune the pre-trained model to your specific problem by training it on your new data.

Read more about data sources for ML here.

This involves freezing the weights of the pre-trained layers and only training the new layers that you have added to the model.

Overall, preparing your data for transfer learning involves selecting a relevant pre-trained model, cleaning and preprocessing your training data, and fine-tuning the deep learning model trained to your specific problem.

By following these steps, you can leverage the knowledge contained in pre-trained models to solve new problems and achieve better results with less data.

The machine learning algorithms are only as good as the raw data, training data, or new data they are trained on, therefore it is important that this labeled data is properly prepared.

It is also important to point out the disadvantages of transfer learning, mainly domain mismatch, that can occur when the pre-trained model may not be well-suited to the second task if the two tasks are vastly different or the data distribution between the two tasks is very different.

Think "Next" For Transfer Learning

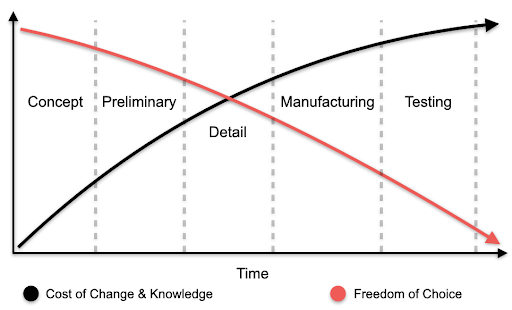

When initially setting up a machine learning model, it is essential to also consider the next design iterations and how they may be different from the current iteration.

In order to ascertain what future design iterations might look like, it is also essential to look back and see how the current design has changed from past ones, noting anything different.

In order to ensure that the inputs and outputs for current designs are relevant for future designs, it is important to think about the following:

What Will Vary in the New Design by Implementing Transfer Learning?

By understanding what is likely to vary or change in the new design, you are able to extract the relevant inputs and outputs for those future designs.

When thinking about a car, there might be variances in the type of material used for the bumper or the presence of a spoiler at the rear of the car.

There might be additional or different features on the wing of an aircraft, like the number of slats.

What Will Remain the Same by Implementing Transfer Learning?

Similarly, by understanding what will not change in any future designs, you can ascertain which inputs and outputs should remain the same.

A car will always have four wheels and a plane will always have two wings, so your labeled data should reflect this.

Apply The Same Transfer Learning Considerations for The Data You Want To Use

These considerations are also important for the data which you want to use as outputs for a machine learning model.

It is important to think about what results you are interested in, and the best format for these to be stored so that they can be reused by the machine learning model on new designs.

Whilst it is challenging to keep track of the best ways to differentiate the next design from the current iteration, it is also very rewarding.

It can seem difficult to have forethought about how future design iterations will change and sometimes even impossible (such as with components that greatly differ from one generation to the next).

However, it is always possible to think about what can be changed in the current design.

The reward of this is the ability to use pre-trained models which have been trained correctly with the right labeled data.

This leads to better quality products being produced faster thanks to the transfer learning process.

Avoid Label Classifiers and Prefer Continuous Numerical Value

Some information might presently be stored in a format that only makes sense for the current design iteration.

Some locations or other inputs can be coded in a way that restricts your ability to extend learning to new designs.

These categorical variables almost always hide other more useful numerical values.

If the labels can be translated into numerical values which describe how a design differs from other designs, the differences are expressed in a clearer way.

It should also be considered that if you come up new design iteration that requires a class your pre-trained model has never before seen, the model developed will not be able to make predictions.

A Working Example of Transfer Learning

As an example, instead of storing the location of an airplane engine on a wing as LOC_ENG = ABC.123.D (a made-up category), it is better to store the distance between the wing root and the engine as an explicit distance such as d=9.50m.

When done this way, AI would learn much more information about the connection between distance and performance, as well as be able to predict the outcomes of new and unseen engine-location categories.

A Transfer Learning Example For Material Properties

Another example for material properties would be instead of defining the material of a car component as AA6061 (standing for Aluminium Alloy 6061), you would learn more from material properties such as stiffness E=69 GPa and the strength S=200 MPa of that aluminium.

The standardisation and parametrisation of your designs, and their corresponding results, will also require a large amount of effort and thinking.

The long-term value of this effort cannot be understated, however.

It makes your data much easier to exploit for any kind of data processing you may want to do, not just for machine learning purposes or transfer learning models.

Transfer Learning Example: An Aircraft Wing

In this scenario, imagine that we have previously designed an entire model of an aircraft wing.

The main factors of designing an aircraft wing are aerodynamics, weight, strength, and manufacturability.

In the process of designing this aircraft wing, we have had to run many expensive simulations and experiments to know how the stress along the wing structure behaves.

The data which we have managed to collect in this process is the stress at different sensor station locations along the wing.

We know that we wish to train a model that can make new predictions for the same aircraft, but we also want to re-use this trained model for a new aircraft at some point in the future.

To highlight the importance of preparing your data for transfer learning, we will explore two possible ways to prepare the data, and how they impact the possibility of transfer learning in regards to a pre-trained model and transfer learning models.

Transfer Learning Possibility A: Without Any Data Preparation

In this example, the data we collected after designing the first aircraft (below, in blue) is not transformed in any way to lend itself to the creation of a pre-trained model.

There may be some “shared parameters” which will also apply to new aircraft designs, such as speed, altitude, and weight.

However, at least two issues may arise when training your machine learning models:

-

There could be “unshared parameters”. These are parameters which are too specific to a given design and therefore cannot generalise well to a new design. Examples include station numbers, or the station numbers closest to the engine.

-

There could be missing “differentiation parameters”. These are parameters that can quantify the difference between your designs, such as the full dimensions and shape of the wing.

Due to this, it is very unlikely that a model developed in the first aircraft (in blue) will be of any use to predict the results of the second, new aircraft pre-trained model (below, in red).

There are two main reasons for this:

-

Your trained model simply has a different number of inputs and outputs. This leads to issues such as what input values do you use for the missing or additional parameters? What values do you predict for the new outputs?

-

The model might make predictions that would only make sense for the first aircraft. As an example, as the load at the wing tip is always close to zero, the model will learn to predict a load of almost zero at station 30 for the first aircraft, but for the second aircraft, the load at station 30 will not be close to zero.

Transfer Learning Possibility B: With Data Preparation for Machine Learning

In the ideal case, all parameters are shared between the two designs.

This requires the data to be processed:

-

Station numbers and positions are converted in lengths and ratios, rather than being a “hard-coded” value

-

The “differentiation parameters” are added to make sure that the difference between different designs can be captured.

As a result of adequately preparing the data, the outputs are transformed to be useful.

Instead of predicting the stress at station number, the relative location along the wing can now be used.

Another solution would be to separate predictions between the stress between the fuselage and the engine, and between the engine and the wing tip.

This highlights the fact that training on a simple design will very rarely provide a good enough model to predict the results of a second, new design.

The impact of the wing length simply cannot be learned if the model has only seen data for a single value of the length.

By preparing the data for transfer learning, the model will now be able to learn the effect of this parameter as data from more designs are gathered.

Transfer Learning Conclusion: Future-Proof Your Models

By employing an AI solution now for your current bottlenecks, you will improve your understanding, gain valuable insights, and solve intractable issues faster than ever before, as transfer learning algorithms leverage task specific features and weights from previously trained models.

But by taking the time to think about how it can capture the evolution of the product over many iterations from the beginning, your model will provide you with far more value.

Whilst the new task of preparing your data for transfer learning can be a long learning process that requires a lot of effort, it is also vastly rewarding.

AI and transfer learning strategies are revolutionsing engineering, as well as how engineering teams can work smarter and bring products to market faster across a wide array of industries and sectors.

Companies who are actively learning about and implementing AI into their product development workflow are gaining a competitive advantage over those yet to adopt AI, as they develop better quality products in less time; through transfer learning strategies and beyond.

Discover how visionary engineers at Honeywell are utilising predictive self-learning models and transfer learning strategies to create smarter energy measurement solutions in the below case study.

Additionally, find out how Monolith, and previously trained models, can reduce testing, improve learning, and ultimately provide value to our customers here.