In an era where the accuracy and integrity of engineering data are paramount, the advent of AI-guided tools represents a significant leap forward.

AI-guided testing gives engineers a suite of tools designed to transform the way engineering data is inspected.

While the promise of AI in enhancing data analysis is vast, engineers, grounded in empirical rigor, may question the reliability of AI interpretations and the potential for over-reliance on automated processes.

It's crucial, therefore, to underscore that these tools are designed not to replace but to augment the engineer's expertise, offering a symbiotic relationship between human insight and AI precision.

This blog post looks at how Monolith's Anomaly Detector helps engineers inspect their data using machine learning algorithms. The aim is more reliable data, with fewer errors, for building accurate machine-learning models.

Engineering data analysis: AI-guided anomaly detection

The precision and reliability of engineering data are critical pillars of innovation and efficiency. The introduction of AI-guided tools opens a new chapter in engineering practice, offering enhanced capabilities in data inspection and analysis.

Engineers face the daunting challenge of navigating vast datasets, where minor discrepancies can lead to significant, costly errors. Against this backdrop, our mission gains critical importance: providing engineers with advanced tools that ensure rapid identification and correction of data inaccuracies, thereby safeguarding the foundation of machine learning models.

Monolith's Anomaly Detector

The strength of the Anomaly Detector lies in addressing a pervasive challenge: test engineers spend inordinate amounts of time grappling with flawed data. Traditional methods, such as visual inspection and sensor-specific rules, are not only inefficient but often fail to capture subtle data anomalies.

This inefficiency is a significant hurdle, detracting from the core objectives of engineering tasks and leading to potential misinterpretations and erroneous conclusions in data-driven decision-making.
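To make the limitation of sensor-specific rules concrete, here is a minimal sketch of a fixed-limit check. The channel names, limits, and readings are all hypothetical and chosen only for illustration; they do not come from any Monolith product or real test rig.

```python
# Hypothetical per-sensor limits (low, high); in practice these would come
# from sensor datasheets or test specifications.
LIMITS = {
    "coolant_temp_c": (60.0, 110.0),
    "oil_pressure_bar": (1.0, 6.0),
}


def check_limits(readings, limits=LIMITS):
    """Return (channel, sample_index, value) for every out-of-range sample."""
    violations = []
    for channel, values in readings.items():
        low, high = limits[channel]
        for index, value in enumerate(values):
            if not (low <= value <= high):
                violations.append((channel, index, value))
    return violations


# Illustrative readings (made up for this sketch).
readings = {
    # A sensor that sticks at 88.0 after the third sample: always in range.
    "coolant_temp_c": [88.0, 89.0, 88.5, 88.0, 88.0, 88.0, 88.0],
    # A single spike above the upper limit.
    "oil_pressure_bar": [3.1, 3.0, 7.2, 3.1, 3.0, 3.2, 3.1],
}

for channel, index, value in check_limits(readings):
    print(f"{channel}: sample {index} out of range ({value})")
```

The rule catches the pressure spike but says nothing about the temperature channel that flat-lines inside its allowed range, which is the kind of subtle anomaly a fixed rule tends to miss.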

The Anomaly Detector is unique in the data inspection domain, embodying the seamless integration of AI and engineering acumen. It's not just about identifying errors faster; it's about redefining the entire workflow of engineers, enabling them to allocate their expertise where it's most needed, while AI takes on the meticulous task of data scrutiny.

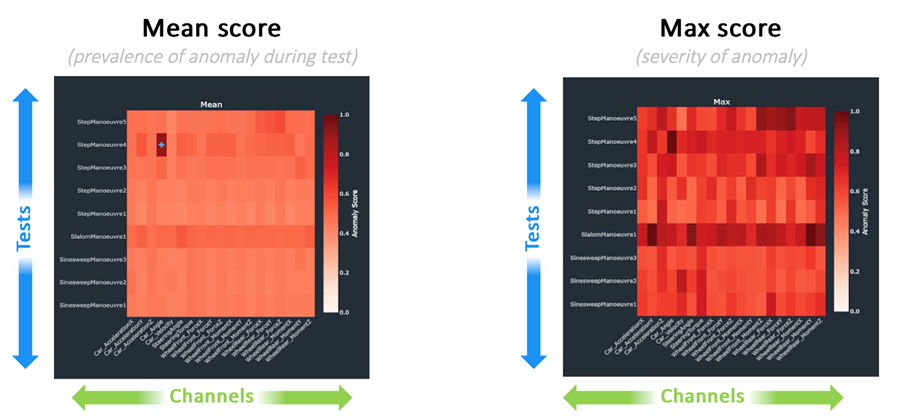

TDV with Anomaly Detection - Anomaly score visualisation: Heat Maps of tests vs channels.

At the technical core of the Anomaly Detector is a sophisticated unsupervised learning algorithm that offers a granular analysis of engineering data. This tool doesn't just flag anomalies; it provides a nuanced ranking of sensors and test runs, highlighting potential areas of concern.

Engineers can delve into the specifics of each anomaly, distinguishing between localised sensor issues and broader systemic behaviours, thereby gaining a comprehensive understanding of the data's integrity.
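The idea of scoring every test and channel, ranking them, and separating localised sensor issues from broader systemic behaviour can be sketched as follows. This is not Monolith's algorithm, which the post does not describe; it swaps in a simple robust z-score (median and median absolute deviation) as a stand-in for unsupervised scoring. The feature values, channel names, function names, and the 3.5 threshold are all assumptions made for illustration.

```python
from statistics import median


def robust_z_scores(values):
    """Distance of each value from the median, scaled by the MAD."""
    med = median(values)
    mad = median([abs(v - med) for v in values])
    # 1.4826 makes the MAD comparable to a standard deviation for normal data.
    # Falling back to 1.0 when the MAD is zero is an assumption of this sketch.
    scale = 1.4826 * mad if mad > 0 else 1.0
    return [abs(v - med) / scale for v in values]


def score_matrix(features):
    """features[test][channel] -> scores[test][channel], scored per channel."""
    tests = list(features)
    channels = list(next(iter(features.values())))
    scores = {test: {} for test in tests}
    for channel in channels:
        column = [features[test][channel] for test in tests]
        for test, score in zip(tests, robust_z_scores(column)):
            scores[test][channel] = score
    return scores


def rank_tests(scores):
    """Tests ordered by their most anomalous channel, highest first."""
    return sorted(scores, key=lambda t: max(scores[t].values()), reverse=True)


def rank_channels(scores):
    """Channels ordered by their most anomalous test, highest first."""
    channels = list(next(iter(scores.values())))
    return sorted(
        channels,
        key=lambda ch: max(row[ch] for row in scores.values()),
        reverse=True,
    )


def classify_tests(scores, threshold=3.5):
    """Label each test 'ok', 'localised' (one channel) or 'systemic' (several)."""
    labels = {}
    for test, row in scores.items():
        flagged = [ch for ch, score in row.items() if score > threshold]
        if not flagged:
            label = "ok"
        elif len(flagged) == 1:
            label = "localised"
        else:
            label = "systemic"
        labels[test] = (label, flagged)
    return labels


# Hypothetical summary feature (for example, a mean value) per test and channel.
features = {
    "test_01": {"temp": 70.1, "pressure": 2.01, "vibration": 0.30},
    "test_02": {"temp": 69.8, "pressure": 1.99, "vibration": 0.31},
    "test_03": {"temp": 70.3, "pressure": 2.02, "vibration": 0.29},
    "test_04": {"temp": 95.0, "pressure": 2.00, "vibration": 0.30},
    "test_05": {"temp": 60.0, "pressure": 2.60, "vibration": 0.90},
}

scores = score_matrix(features)
print("Tests by anomaly score:", rank_tests(scores))
print("Channels by anomaly score:", rank_channels(scores))
for test, (label, flagged) in classify_tests(scores).items():
    print(test, label, flagged)
```

The `scores` dictionary is the same tests-by-channels grid that a heat map would display. In this made-up data, a test with one flagged channel points towards a sensor fault, while a test with several flagged channels suggests that the system itself behaved differently.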

The true value of the Anomaly Detector lies in its capacity to augment the engineering workflow rather than disrupt it. Automating the detection of data quality issues gives engineers grounds to trust their datasets, so that their analyses and models are built on solid foundations.

This shift not only streamlines the testing process but also mitigates the risk of downstream errors in analytics and modelling, catalysing efficiency and innovation in engineering projects.

Applicability

The application of the Anomaly Detector is vast and versatile, particularly suited to environments where sensor data is paramount. In sectors like automotive testing or industrial monitoring, where dozens or even hundreds of sensors are in play, the ability to swiftly pinpoint and rectify anomalies is invaluable.

This tool is a critical ally in the quest to optimise data reliability, ensuring that engineers can focus on their primary objectives without the looming concern of underlying data errors.

See AI-guided anomaly detection in action

The webinar with Dr. Joel Henry and John Pasquarette demonstrates how common data errors in testing and validation labs can be identified early, and offers insight into the challenges that plague data integrity.

More importantly, you will learn how to train and apply the Anomaly Detector, so that you can use the tool in your own data analysis work.

Conclusion: A new era in engineering data analysis

As the engineering sector continues to evolve, the demand for accurate, error-free data will only escalate. With Monolith's AI-powered solutions, engineers are now better equipped than ever to meet this demand, ensuring that the data that forms the backbone of their work is as reliable as it is insightful.

In this blog post, we've explored how Monolith's Anomaly Detector is set to revolutionise the way engineers inspect and validate their data. By automating the detection of errors and anomalies, Monolith is not just enhancing data integrity; it's reshaping the landscape of engineering innovation.